Tesla's Current Autonomy Mess Makes Cybercabs a Pipedream

A look at the multitude of problems & legal challenges Tesla faces due to its half-baked "self-driving" technology, and why today's Robotaxi event is a sham

Topics covered in this note:

Why Tesla’s autonomous driving technology is very far from being street-legal for robotaxis.

How the US regulator’s data on Tesla Autopilot/FSD crashes and fatalities are likely understated.

Thoughts on how to trade Tesla today before its “Robotaxi” event at 19:00 PST in LA. The consensus is for a “sell-the-news” event tomorrow, but with both the bulls and bears in agreement on this, it could be the opposite.

Before watching today’s massively hyped and highly anticipated Tesla “Robotaxi” event (which was delayed by over two months), it’s crucial to understand just how dangerous and ineffective Tesla’s current autonomous driving technology is.

This is why today’s event is such a clown show, as Musk is forcing Tesla to make a high-production display of technology they’re not even close to perfecting.

For the sake of brevity, below are some bullet points and charts on the key problems facing Tesla’s Autopilot and Full Self-Driving (FSD) technologies, and why they make today’s Robotaxi event the ultimate farce.

Most of the data in this report is from the US National Highway Traffic Safety Administration (NHTSA), the main regulator of automobile safety and autonomous driving. NHTSA is so powerful that it has forced billion-dollar recalls at Bridgestone, Takata (which went bankrupt), Toyota, and GM.

With Tesla, however, NHTSA has behaved like a puppy exposing its belly in a show of submission (which is another report I plan to write soon). Nevertheless, even this supine US regulator has some damning data, although much of it is redacted at Tesla’s request.

First, a few caveats about Tesla’s robotaxi event, which was named “We, Robot”, an utterly moronic wordplay on Asimov’s short-story series, I, Robot (only Musk would do this).

Whatever is displayed today is either vaporware or a long way from mass production, whether it be a Cybercab, wireless charging systems, a Tesla “robotaxi” app, new models, etc. Note that Tesla’s “solar roof tiles” (announced in 2016) still don’t exist, the Tesla Semi (announced in 2017) is still under “development”, and the Cybertruck (announced in 2019) was delayed for 2 years yet is still riddled with so many problems that it’s had 5 recalls this year.

Tesla’s autonomous driving technology is lethal in its current state while rivals like Waymo are 50,000 times more advanced: Tesla’s current autonomous driving technology is deadly (see Figures 1 & 2) and far from being street-legal as the basis of a Tesla robotaxi network. Waymo, by contrast, already provides 100,000 paid trips per week and operates its fleet in 4 cities.

Tesla has yet to acquire permits to operate robotaxi fleets: All autonomous vehicle companies trying to train their fleet on public roads in California (the best testing grounds for robotaxis) need a permit from the California Department of Motor Vehicles (CA DMV) and the California Public Utilities Commission (CA PUC). While Tesla recently received a permit from the CA DMV, it hasn’t acquired one from the CA PUC, which licenses all ride-hail services in California. Tesla also has yet to register for permits in Arizona or Nevada, which also allow autonomous vehicle testing.

If Tesla’s FSD were so reliable as an autonomous driving technology, why hasn’t the company assumed full legal responsibility for it? Musk is notoriously penurious and he’s the driving force behind cutting quality controls at Tesla so that profits are higher, thereby keeping the stock at lofty valuations. If he is serious about his claims of FSD being near full autonomy, why hasn’t he taken on the full legal risks involved in registering all Teslas as robotaxis?

Tesla’s Autonomous Driving Technology is Deadly

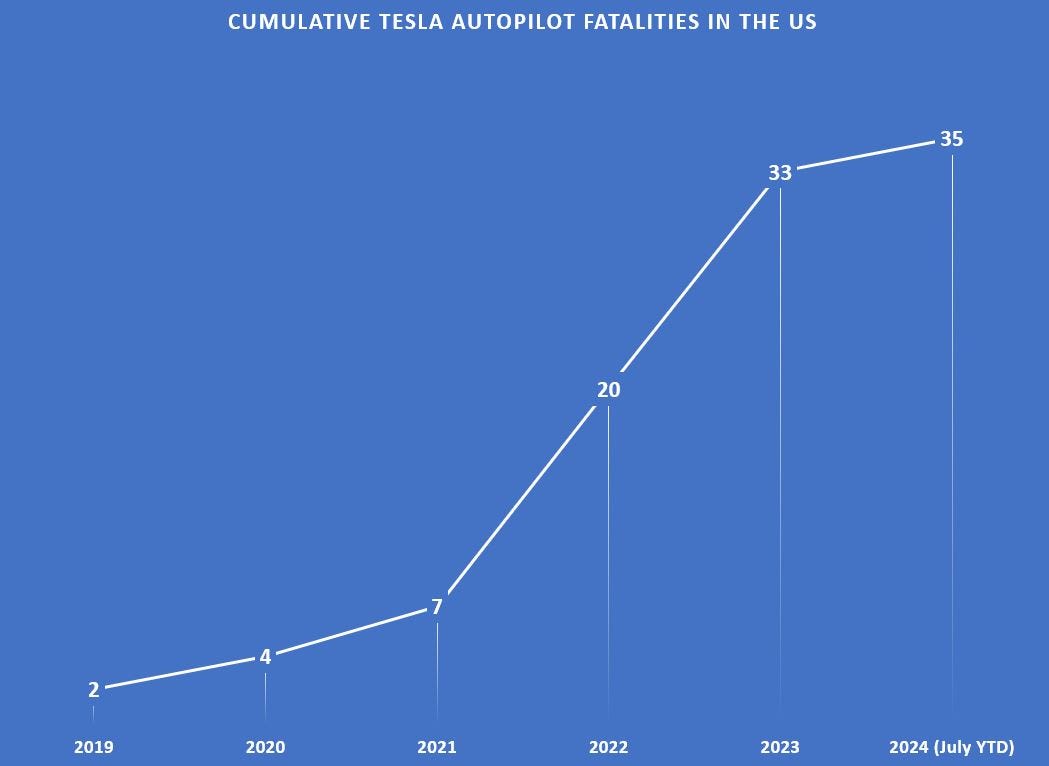

35 fatalities according to NHTSA: Since June 2021, NHTSA has collected Level 2 driver-assist data from every carmaker selling such products in the US. While the NHTSA data shows that Tesla has a total of 35 deaths from using Autopilot/FSD since 2019, the number of deaths since the investigation began in earnest in 2021 is 30 (see Figure 1).

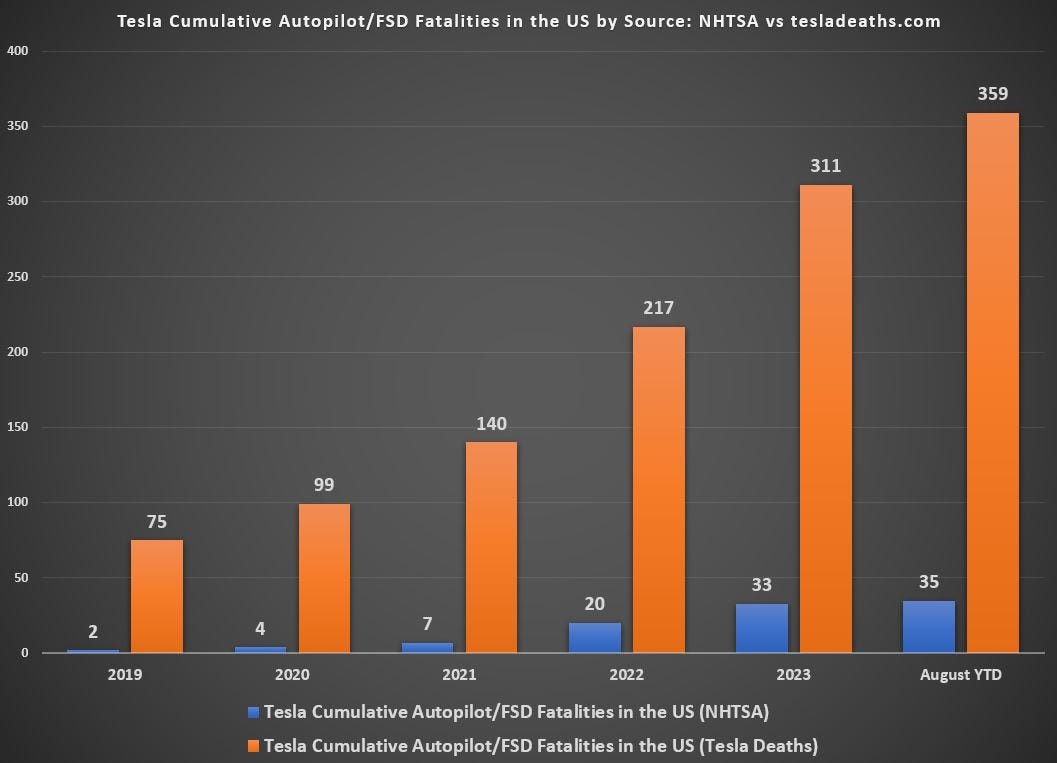

47 fatalities in reality: The website Tesla Deaths has a much more comprehensive count of Autopilot/FSD fatalities and has verified many of them. Its tally between 2016 and now is 44 deaths in the US and another 3 overseas. The website has been quoted by the New York Times and other media organizations (link here). The difference between the fatality counts of NHTSA (which gets its data from carmakers) and Tesla Deaths (which scours the Internet and confirms incidents with local records) is astounding. Please see Figure 3 below to get a sense of how egregiously flawed NHTSA’s data is.

An interesting point about NHTSA’s bad data is the fact that they reported only 2 Autopilot/FSD-related fatalities this year, whereas Tesla Deaths counts 49 fatalities through August 11th. The difference in the tally of fatalities between NHTSA and Tesla Deaths is shown in Figure 3.

Figure 1: Cumulative Deaths From Crashes on Autopilot/FSD

Source: NHTSA

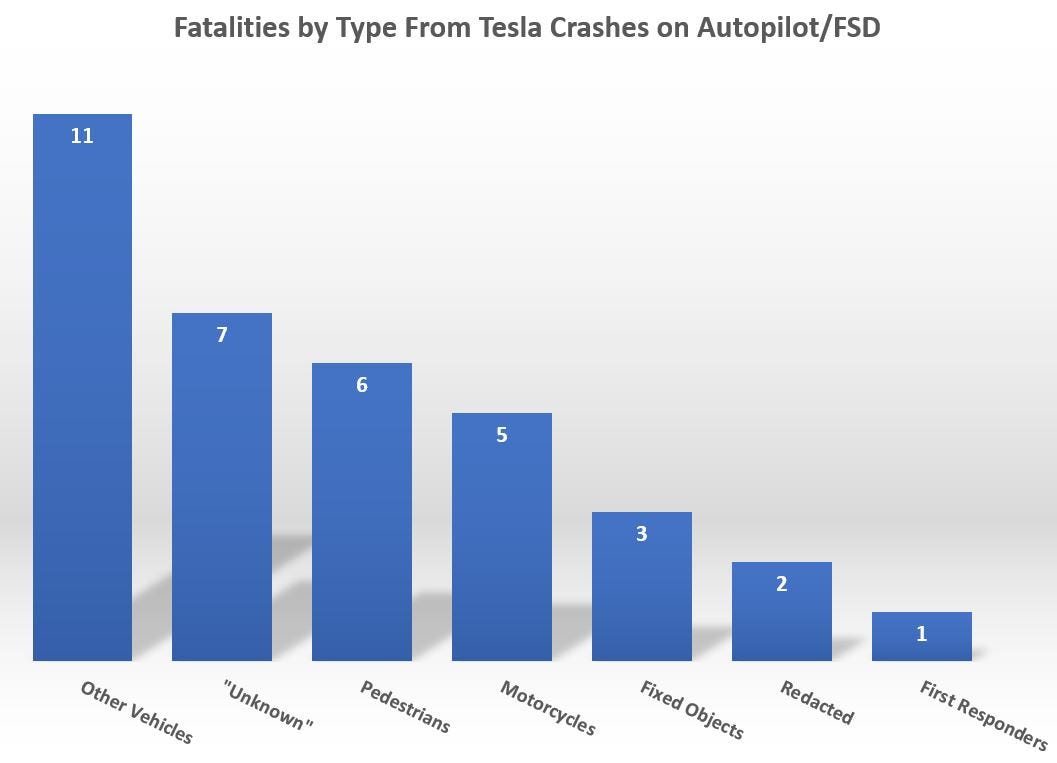

Tesla’s Autopilot has a penchant for crashing into roadside emergency responders and motorcyclists: Figure 2 shows the fatalities from Tesla crashes on Autopilot/FSD by category. While “other vehicles” stands out, it should be noted that motorcyclists and pedestrians are most at risk:

83% of Autopilot/FSD crashes with motorcyclists end in death.

46% of Autopilot/FSD crashes with pedestrians end in death.

9% of Autopilot/FSD crashes with highway first responders end in death.

All of the above statistics could be way off because NHTSA allows Tesla to hide a lot of data. For example, note that in Figure 2 below, the categories labeled as “Unknown” and “Redacted” account for 9 (25.7%) of the 35 listed Autopilot/FSD fatalities.
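As a quick sanity check on the shares quoted above, here is a minimal Python sketch that uses only the counts stated in this note. The helper name `share_pct` is purely illustrative and not part of any NHTSA tool or dataset.

```python
def share_pct(part: int, total: int) -> float:
    """Return part/total as a percentage, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * part / total, 1)


# Counts as cited in this note (NHTSA figures).
nhtsa_total_deaths = 35    # Autopilot/FSD deaths listed since 2019
unknown_or_redacted = 9    # deaths categorized as "Unknown" or "Redacted"
deaths_since_2021 = 30     # deaths since the 2021 reporting began

print(share_pct(unknown_or_redacted, nhtsa_total_deaths))  # 25.7
print(share_pct(deaths_since_2021, nhtsa_total_deaths))    # 85.7
```

In other words, roughly one in four of the listed fatalities cannot be assigned to any crash category at all, which is why the category-level percentages should be read with caution.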

Figure 2: Autopilot/FSD Deaths by Category

Source: NHTSA

Figure 3: The US Regulator Doesn’t Properly Report Tesla’s Autopilot/FSD Fatalities

Source: NHTSA & Tesla Deaths

Tesla Autopilot Aims for First Responders & Motorcycles on Highways

Tesla’s Autopilot has a high tendency to crash into first responders parked on the side of highways. Given that first responders on US highways are usually police cars or fire engines helping drivers in distress, the fact that Tesla’s Autopilot/FSD has crashed into over 11 emergency responders is astounding (9 of the incidents were during the past 2 years).

This has been a major investigation for NHTSA since 2021, as law enforcement officers and firefighters are being put in danger while doing their jobs (one police unit in California is suing Tesla over an Autopilot crash into its roadside assistance operation in 2021).

After much pressure from the regulators, Tesla activated its in-cabin camera to monitor driver attentiveness and declared the problem was solved. However, it was a cheap fix, given Musk’s proclivity to cut corners to boost profits.

Tesla installed these cameras in all Model 3/Y cars from 2017 in preparation for robotaxis once Tesla achieved Level 5 autonomy. Musk said in 2019 that this camera would view passengers in the back seat of Tesla cars once Tesla’s robotaxi fleet was launched. Hence the positioning of the camera behind the rear-view mirror, near the cabin ceiling.

This cheap fix was a failure and will come back to haunt Tesla in future recalls for the following reasons:

9 out of 11 crashes by Autopilot/FSD into emergency responders came AFTER Tesla told NHTSA that they’d fixed the problem via an over-the-air (OTA) update.

The in-cabin camera that Tesla activated under regulatory pressure to monitor driver attentiveness is a low-quality component not used by any other carmaker.

Fixing the problem would be very costly: all other carmakers that use cameras to monitor driver attentiveness place them around the dashboard, which is the optimal position for such devices.

Repositioning the camera from the rear-view mirror to the dashboard would be expensive for Tesla, not only because of the labor involved but also because of how much rewiring of the car’s electrical systems would be required.

The Wall Street Journal did a brilliant investigation into this last year using previously undisclosed dashcam footage and police reports from 2021. It’s only 6 minutes long and I highly recommend watching it (link here).

Tesla Autopilot/FSD Motorcycle Crashes Kill 83% of Victims

Figure 4 shows the number of Tesla crashes on Autopilot/FSD by category. While there were only 6 crashes into motorcycles, note that 5 of those accidents resulted in the deaths of the motorcyclists.

And while the NHTSA data is likely flawed, it’s noteworthy that both motorcycle and first responder crashes were mostly reported after Tesla told NHTSA that they fixed the problems flagged in 2021.
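The percentages behind these claims follow directly from the small counts cited in this note. A minimal Python sketch, with `pct_of` as a hypothetical helper name:

```python
def pct_of(part: int, total: int) -> float:
    """Percentage of `total` represented by `part`, rounded to one decimal."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= part <= total:
        raise ValueError("part must be between 0 and total")
    return round(100 * part / total, 1)


# Counts as cited in this note.
motorcycle_crashes, motorcycle_deaths = 6, 5
responder_crashes, responder_crashes_after_fix = 11, 9

print(pct_of(motorcycle_deaths, motorcycle_crashes))           # 83.3
print(pct_of(responder_crashes_after_fix, responder_crashes))  # 81.8
```

Because the sample is so small, these rates are fragile: a single additional non-fatal motorcycle crash would move the fatality rate from 83.3% to 71.4% (5 of 7).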

Figure 4: Tesla Autopilot/FSD Crashes by Object of Impact

Source: NHTSA

Tesla is Forced to Address Unfixed Autopilot/FSD Problems Highlighted by NHTSA by July 1st: Update is Imminent

When NHTSA announced a recall of 2 million Teslas in North America in December 2023 over inadequate monitoring of driver attentiveness, the market once again brushed it off as a “nothing burger” because Tesla said the problem would be solved by an OTA update.

Little was fixed, as NHTSA released a scathing report in April saying that at least 20 issues that Tesla said they’d fix regarding Autopilot/FSD weren’t fixed at all. This CNBC article by their Tesla expert (link here) is worth reading just to get an idea of how egregious Tesla’s replies are to the US safety authority.

Trading Thoughts Regarding Robotaxi Day

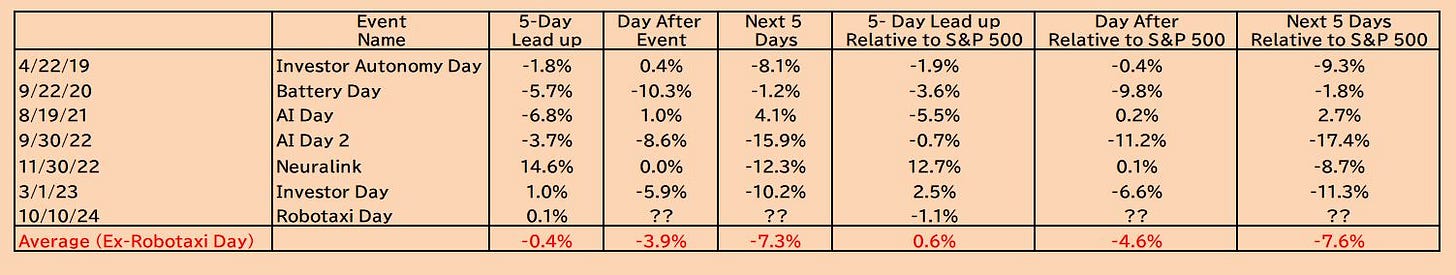

Tesla has sold off by an average of 4% in the 5 trading days after the hyped-up events it has held in the past (see Figure 5).

The biggest sell-offs were -11% after Battery Day in 2020 and -6% after Investor Day in 2023.

Red Flag: The fact that both the bulls and the bears think that Robotaxi Day won’t impact the share price is a huge risk flag in my opinion (i.e. one slightly positive takeaway from this event could massively spike the stock price).

Figure 5: How Tesla Trades After Big Events

Source: Bloomberg

Another great insight. Tesla is the greatest stock fraud in Wall Street history.

To me, the biggest elephant in the room is we are talking about a fuqing taxi. I mean they act like they are unveiling a time machine.

Given that Tesla is neither a first mover nor actually demonstrating technical equivalence to Waymo and Baidu, let alone technical superiority, how are they going to achieve 700 billion in robotaxi revenue? Never mind that the entire global ride share industry is projected to generate only 218 billion in 2029, Tesla is going to somehow, because of its magical effect on everyone, get the world to make that industry 700 billion, of which they will have 100 percent? Don't ask me, ask Cathie Wood, she put out that batsbit crazy piece of stock fraud to pump the stock on the last clown show, the shareholder meeting.

But aside from those minor issues, my biggest issue with FSD is: who the fuq actually even wants this? What is the large-scale societal problem that requires this solution? So we can browse Instagram reels on the 15 minute drive to Kroger? All you need to do is drive on the New Jersey Turnpike spur at night in the rain once and you realize: what moron would give up control of an 80-mile-an-hour projectile? What in God's name could be so important that you would take that risk? I don't give a rats asce what Musk says, or the NHTSA for that matter, my hands are on the wheel and eyes on the road. I can look at Instagram when I get home.

FUN FACT: Today, in Los Angeles, WB CEO David Zaslav can take a Waymo-assisted Jaguar from his Beverly Hills mansion to the 20th Century Fox Studio lot, Sony, CBS Studio City, Netflix, Hulu, Amazon, Apple, Nickelodeon, Paramount, UCLA and USC Film Schools, CNN's LA studio, William Morris Endeavor, CAA, the LA Times, Spotify, the WGA, the DGA, AMPAS, and TikTok... before heading to the beach, the Petersen Automotive Museum, LACMA, a Lakers/Kings game, or a movie at the Fox Village Westwood theater co-owned by Christopher Nolan, the director he chased away from WB after delivering 17 years of critically acclaimed hit movies.